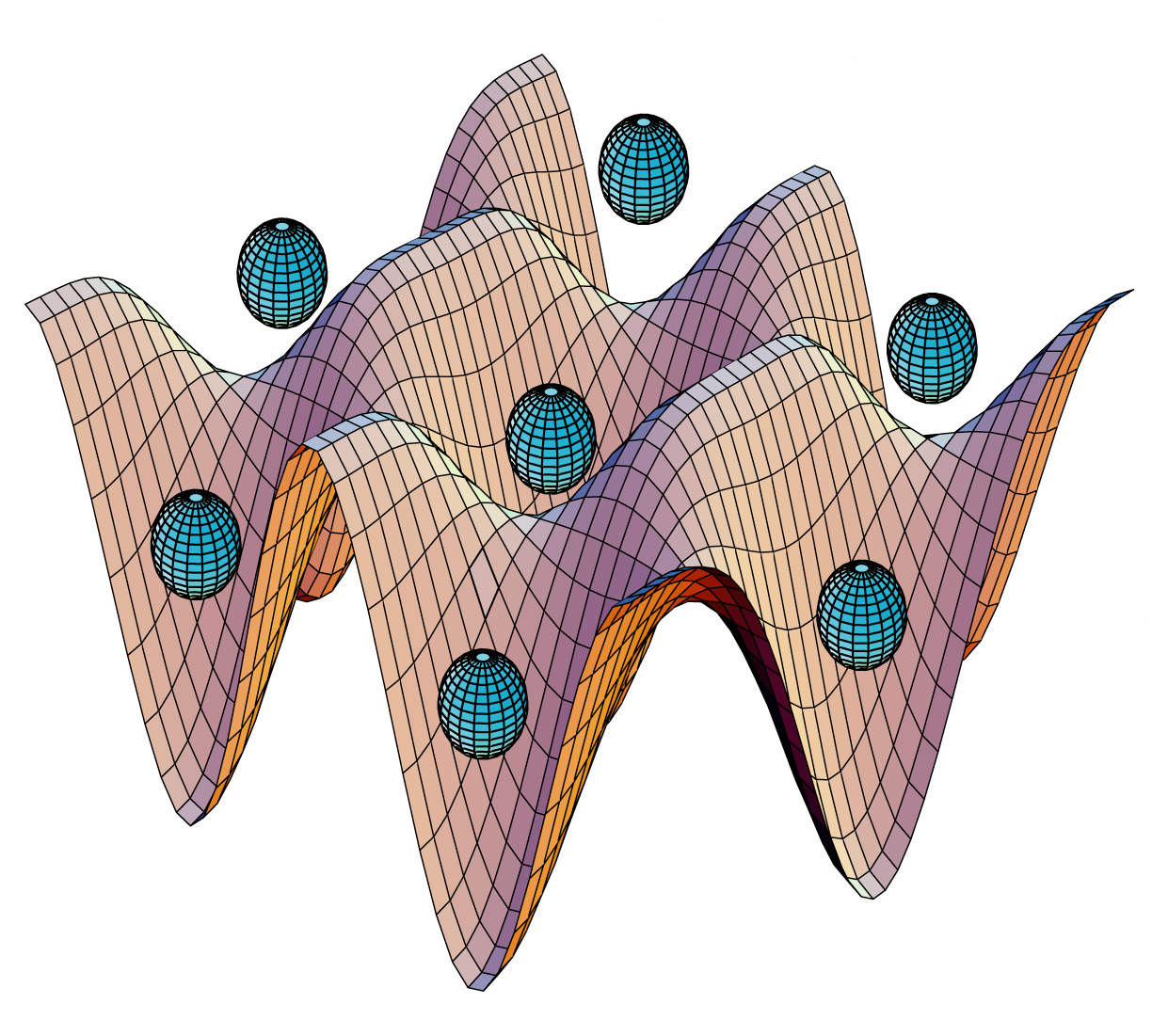

In the beginning was the vacuum. And nature abhorred the vacuum, filling it with topology. The surprising connection between quantum field theory (QFT) and topology yields the instanton, a soliton and a cousin of the magnetic monopole.

Consider the U(1) problem in QCD. If the up, down and strange quarks have identical mass, the Lagrangian acquires an extra U(3) symmetry, the product SU(3)×U(1). Removing all mass from the quarks generates an additional copy of U(3). The pair of SU(3) groups is accounted for by the Eightfold Way of strongly interacting particles and by the mesons generated from the spontaneous symmetry breaking. One U(1) factor expresses conservation of baryon number, but the last additional U(1) group necessitates particles that don't even exist. Nonetheless, the chiral symmetry is broken; but how? The solution is the instanton.

But what topological features make instantons relevant to the QCD vacuum? Imagine a topologist mowing her lawn with an electric mower; she runs into complications as she tries to move the power cord around trees and shrubs. This is an intuitive analogy for homotopy. She gives each tree a 'winding number': 1 for one circuit around the tree, 2 for two circuits, and so on. Topologically, a tight path and a wide path around a tree or shrub are equivalent; they are homotopic, as one can be deformed into the other without cutting the cord. But if she moves the mower in a loop back to her starting position, it might look as though nothing has changed, yet there is a subtle difference: the cord now winds once around a tree.

An analogous example of how the non-trivial topology of a vacuum can have physical effects is the Aharonov-Bohm effect. If an electron beam is split so that it passes both ways around a current-carrying solenoid and then recombines, the result is an interference pattern that shifts as the magnetic flux in the solenoid changes, even though the electrons travel only through the field-free region outside it.
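The winding number from the lawn-mowing picture, which also counts how many times a path encircles the solenoid, can be computed directly. The following is a minimal Python sketch, assuming the path is a closed polygon in the plane and the tree is a single point; the helper names `winding_number` and `circle` are illustrative and do not come from any library.

```python
import math


def winding_number(path, tree):
    """Count how many times a closed path winds counter-clockwise around `tree`.

    `path` is a list of (x, y) points; the last point is joined back to the first.
    The path is assumed never to pass through the tree itself.
    """
    tx, ty = tree
    total = 0.0
    n = len(path)
    for i in range(n):
        x0, y0 = path[i]
        x1, y1 = path[(i + 1) % n]
        # Angle of each end of the segment as seen from the tree.
        a0 = math.atan2(y0 - ty, x0 - tx)
        a1 = math.atan2(y1 - ty, x1 - tx)
        step = a1 - a0
        # Wrap the step into (-pi, pi] so that crossing the branch cut of
        # atan2 is not mistaken for a full turn.
        while step > math.pi:
            step -= 2 * math.pi
        while step <= -math.pi:
            step += 2 * math.pi
        total += step
    return round(total / (2 * math.pi))


def circle(center, radius, turns=1, steps=200):
    """Sample a circular path that goes round `center` `turns` times."""
    cx, cy = center
    return [
        (
            cx + radius * math.cos(2 * math.pi * turns * k / steps),
            cy + radius * math.sin(2 * math.pi * turns * k / steps),
        )
        for k in range(steps)
    ]


tree = (0.0, 0.0)
tight_loop = winding_number(circle(tree, 1.0), tree)
wide_loop = winding_number(circle(tree, 5.0), tree)
double_loop = winding_number(circle(tree, 1.0, turns=2), tree)
missed_loop = winding_number(circle((3.0, 0.0), 1.0), tree)
```

In this sketch the tight and the wide loop give the same integer, a double circuit gives twice that, and a loop that does not enclose the tree gives zero. The integer is unchanged by any deformation of the path that does not cross the tree, which is why only the puncture matters.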
Thus, the topology of the field-free vacuum outside the solenoid is equivalent to a punctured plane (the puncture made by the solenoid). Now we can pump an extra gauge-invariant term (the instanton) into the Lagrangian. In QCD this is essentially a gluonic entity: a ripple in the gluon field. Between the initial and final field states lies a localised region where there is some positive energy; this is the instanton. Instantons have a 'topological charge', which explains their stability. Picture an A4 sheet of paper given a single 180-degree twist and then taped at both ends to a coffee table. The sheet will remain twisted until someone cuts the tape; the twist plays the role of the topological charge.

In QFT, the initial and final states are both the vacuum. We can envision an instanton as a path that links initial and final states with different topological winding numbers. Since the winding number can be any integer, the vacuum is not only a state of lowest energy but also an aggregation of an infinite number of apparently identical yet topologically distinct vacua. The lawn-mowing analogy is helpful here: the trees and shrubs, over whose tops the power lead cannot simply be passed, act as barriers to the movement of the mower. In field theory the equivalent is an energy barrier, and instantons cross it by quantum tunnelling (linking one distinct topological state to another), an effect measured by the θ-parameter.

But how do instantons solve the U(1) problem? We could just invoke a respectable particle to account for the symmetry breaking, like the η meson; but it is already accounted for as a Goldstone boson, and the particle with the next mass up is too heavy. Instantons, like Goldilocks, give just the right symmetry disturbance. An instanton is a massless spiral of gluons that inverts right-handed quarks into left-handed ones.
Such an inversion of handedness breaks the chiral symmetry and deals with the additional U(1) symmetry without the need for extra particles.
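
For readers who want the symbols behind the words, the topological charge, the stack of vacua and the θ-parameter are conventionally written along the following lines. This is a sketch in one common convention; signs and normalisations differ between textbooks. The symbols $g$ (gauge coupling), $F^a_{\mu\nu}$ (gluon field strength) and $|n\rangle$ (the vacuum with winding number $n$) are notation introduced here for illustration and do not appear in the text above.

```latex
% Topological charge of a gauge-field configuration (an integer)
Q = \frac{g^2}{32\pi^2} \int d^4x \, F^a_{\mu\nu} \tilde{F}^{a\,\mu\nu} \in \mathbb{Z},
\qquad
\tilde{F}^{a\,\mu\nu} = \tfrac{1}{2}\, \epsilon^{\mu\nu\rho\sigma} F^a_{\rho\sigma}

% The theta-vacuum: a superposition of all winding-number vacua
|\theta\rangle = \sum_{n=-\infty}^{\infty} e^{i n \theta} \, |n\rangle

% The corresponding gauge-invariant term in the Lagrangian
\mathcal{L}_\theta = \theta \, \frac{g^2}{32\pi^2} \, F^a_{\mu\nu} \tilde{F}^{a\,\mu\nu}
```

In this notation an instanton with $Q = 1$ is the tunnelling path that takes $|n\rangle$ to $|n+1\rangle$, and θ fixes the relative phase with which the vacua are combined.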