Cool things happen when you cool liquid helium below about 2.2 K (2.17 K, to be precise). Below this temperature, the lambda point, superfluidity takes over: the viscosity drops essentially to zero and the liquid flows without friction. Put it in a container and it will creep up the walls as a thin film and over the rim, and it will leak through even very fine pores in the walls. This behaviour is hard to explain with classical fluid mechanics. Poiseuille's law states that the flow rate through a capillary is proportional to the pressure difference across it and to the fourth power of its radius. Below the lambda point, however, the flow rate of liquid helium was not only high but essentially independent of both the capillary radius and the pressure difference, which is clearly beyond the explanatory scope of classical theory. Something equally striking happens while the liquid is being cooled by pumping away its vapour: at the lambda point it suddenly stops boiling, because its thermal conductivity increases so sharply that the whole liquid stays at a uniform temperature and bubbles no longer form.

Let's start our exploration from the ground up. At ordinary pressures liquid helium never solidifies, however cold it gets (it freezes only when squeezed to roughly 25 atmospheres), because the weak van der Waals attraction between the atoms is not strong enough to overcome the zero-point motion that comes with trying to confine a helium atom to a lattice site. The nature of the superfluid is therefore necessarily quantum mechanical.

London suggested that superfluidity is a manifestation of Bose-Einstein condensation (BEC). The difficulty is that Bose-Einstein condensation was derived for an ideal gas, whose particles do not interact, whereas helium atoms attract weakly at a distance and repel strongly at close range. Feynman's path-integral approach led him to two important but subtle insights. Firstly, helium atoms are bosons, so Bose symmetry guarantees that the wave function is unchanged when any two helium atoms swap positions.
Secondly, when an atom moves slowly along its trajectory, its neighbours must also move slowly to get out of its way. This 'making room' adds kinetic energy and therefore adds to the action of that path. The net effect is that we must revise what we think of as the mass of a helium atom: because several atoms have to make way whenever it moves, the paths that contribute most to the sum over paths look like those of a particle with a somewhat increased effective mass.

But what keeps superfluid helium superfluid? Landau suggested that at low temperatures there are no available low-energy states near the coherent (BEC) ground state into which fluctuations could push the quantum fluid. A classical fluid has viscosity (resistance to flow) because individual atoms collide with other atoms and molecules and with any debris in the container; these collisions change the particles' motion and transfer energy from the flowing fluid to the container. If there are no states available to be filled, as Landau suggested, the particles cannot change their motion and keep flowing without dissipating energy. Feynman wanted to derive this from quantum mechanics. His starting point was that, because helium atoms repel each other at short distances, the ground state (lowest-energy state) of the liquid has a roughly uniform density. You can picture each atom as confined to a 'cage' formed by the surrounding atoms; the higher the density, the smaller the cage. The uncertainty principle tells us that confining an atom to a smaller space raises its energy, so the ground state is reached when the atoms are as far apart from one another as possible.
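
The cage picture and the uncertainty principle can be put into rough numbers with a particle-in-a-box model. This is only a sketch under strong assumptions: the cage is treated as a rigid cubic box of side L, so the ground-state kinetic energy is E = 3π²ħ²/(2mL²). The function name `cage_zero_point_energy` and the cage widths are illustrative choices, not values from a real calculation for liquid helium.

```python
import math

# Physical constants (CODATA values); the helium-4 mass is 4.002602 u.
HBAR = 1.054571817e-34                   # reduced Planck constant, J s
K_B = 1.380649e-23                       # Boltzmann constant, J/K
M_HE4 = 4.002602 * 1.66053906660e-27     # mass of one helium-4 atom, kg


def cage_zero_point_energy(cage_width_m: float, mass_kg: float = M_HE4) -> float:
    """Ground-state kinetic energy (J) of a particle in a cubic box of side L.

    Uses E = 3 * pi^2 * hbar^2 / (2 * m * L^2). The box is a crude,
    hypothetical stand-in for the 'cage' formed by neighbouring atoms.
    """
    if cage_width_m <= 0:
        raise ValueError("cage width must be positive")
    return 3.0 * math.pi**2 * HBAR**2 / (2.0 * mass_kg * cage_width_m**2)


# Illustrative (hypothetical) cage widths in angstroms: smaller cage, higher energy.
for width_angstrom in (2.0, 1.5, 1.0):
    energy = cage_zero_point_energy(width_angstrom * 1e-10)
    print(f"L = {width_angstrom:.1f} angstrom -> E/k_B ~ {energy / K_B:.0f} K")
```

Halving the cage width quadruples the confinement energy, and because the mass sits in the denominator, a light atom such as helium pays a particularly high price for being confined.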

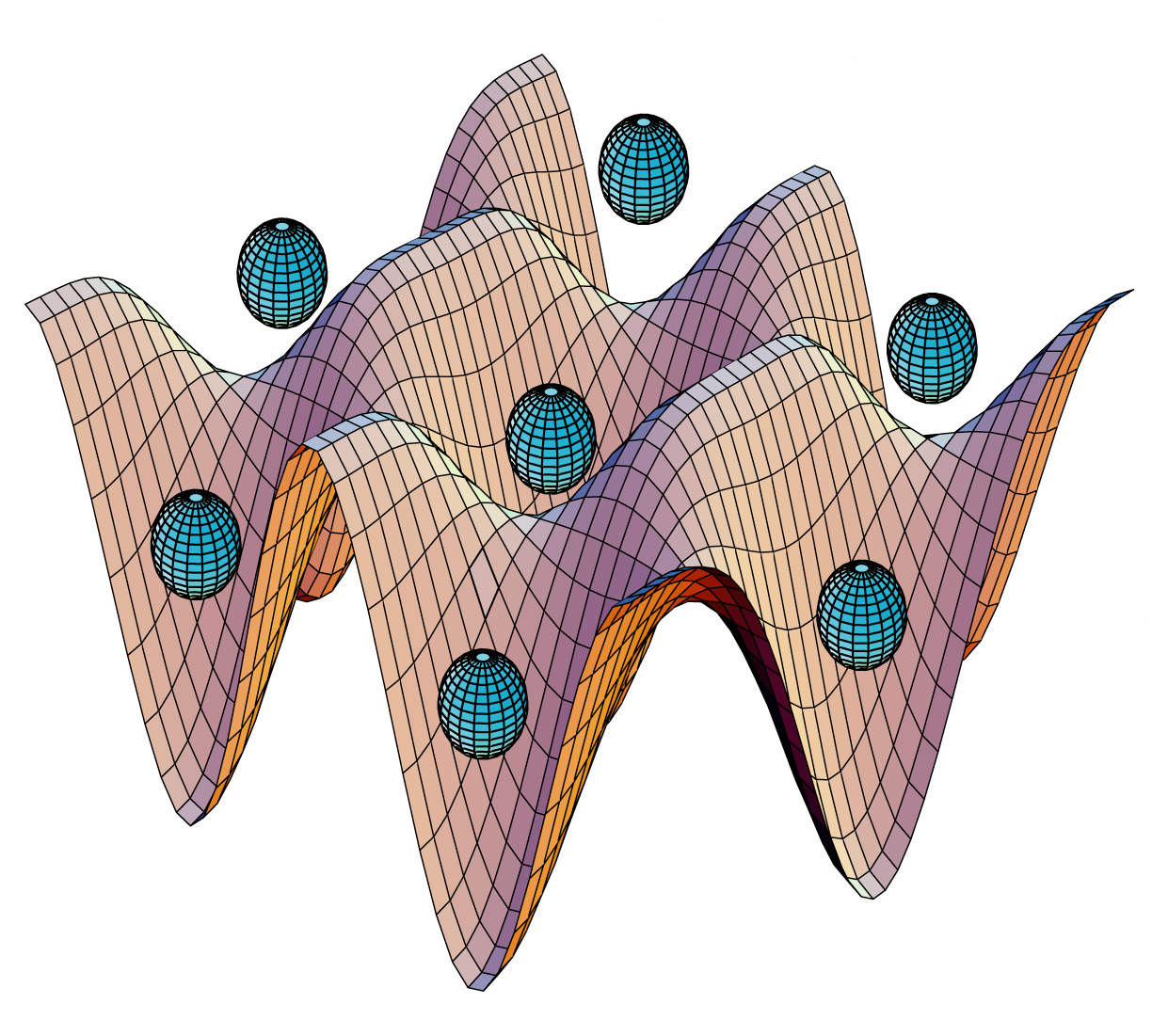

Now take the uniform-density ground state and try to build a new state that differs from it only over large distances, so that any extra 'wiggles' in the wave function are widely spaced (by the uncertainty principle, closely spaced wiggles would cost a lot of kinetic energy). Moving atoms a long way does not produce such a state: because of Bose symmetry, a configuration in which one atom has moved far away and another has taken its place is indistinguishable from the original, so the wave function does not register these large displacements. In other words, the extra wiggles needed to describe a genuinely new state cannot be spread over distances larger than the average spacing between atoms. Wiggles on that scale correspond to excited states whose energy is higher than random thermal fluctuations can supply at 2.2 K or below. This suggests that there are no low-lying states just above the ground state that particle motion could readily reach, and so nothing to act as a resistance to flow. As in a superconductor, the superflow persists as long as the energy available to disturb the fluid is smaller than the 'energy gap' between the ground state and the lowest excited state.

Tisza proposed a 'two-fluid' model. At absolute zero all of the liquid helium is in the superfluid state; as the liquid is warmed, thermal excitations appear and behave like an ordinary, viscous 'normal' fluid that can dissipate energy. The normal fraction grows with temperature until, at the lambda point, the whole liquid is normal.

But what happens if you spin a bucket of superfluid? Given the structure of the ground state and the energy needed to excite anything above it, the superfluid flow seemed to have to be free of rotation (irrotational). So how can spinning the container make the fluid rotate at all?
Feynman suggested that the fluid would rotate around thin cores only a few atoms across, and that these cores form so-called vortex lines, which can tangle and twist around one another. Such vortices need not run from the top of the container to the bottom; they can also close on themselves as rings. Feynman linked the smallest such ring to the roton, the excitation at the minimum of the helium excitation spectrum, which can be pictured as a small local region moving at a different velocity from the background fluid. For the angular momentum to remain quantized, the fluid carried forward through such a region must flow back around it elsewhere, just as it does in a vortex ring.
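
One consequence of quantized circulation can be worked out in a few lines. Assume every vortex line carries a single quantum of circulation, κ = h/m, and that the lines are spread evenly enough for the superfluid to mimic solid-body rotation at angular velocity Ω. The circulation around a circle of radius R is then 2πR·ΩR, and it must equal κ times the number of lines enclosed, nπR², which gives an areal density n = 2Ω/κ (often called Feynman's rule). The sketch below rests on those assumptions (singly quantized lines, an ideal uniform array); the function names and the 1 rad/s spin rate are illustrative.

```python
import math

# Physical constants (CODATA values); the helium-4 mass is 4.002602 u.
H = 6.62607015e-34                       # Planck constant, J s
M_HE4 = 4.002602 * 1.66053906660e-27     # mass of one helium-4 atom, kg
KAPPA = H / M_HE4                        # quantum of circulation h/m, m^2/s


def vortex_areal_density(omega_rad_s: float) -> float:
    """Number of singly quantized vortex lines per m^2 needed for the
    superfluid to mimic solid-body rotation at angular velocity omega.

    Circulation around a circle of radius R is 2*pi*R * (omega*R); setting it
    equal to KAPPA * n * pi * R**2 gives n = 2 * omega / KAPPA.
    """
    return 2.0 * omega_rad_s / KAPPA


def mean_vortex_spacing(omega_rad_s: float) -> float:
    """Typical distance (m) between neighbouring vortex lines, about n**-1/2."""
    if omega_rad_s <= 0:
        raise ValueError("angular velocity must be positive")
    return 1.0 / math.sqrt(vortex_areal_density(omega_rad_s))


omega = 1.0  # hypothetical spin rate of the bucket, rad/s
print(f"kappa = {KAPPA:.3e} m^2/s")
print(f"{vortex_areal_density(omega) / 1e4:.0f} vortex lines per cm^2")
print(f"mean spacing ~ {mean_vortex_spacing(omega) * 1e3:.2f} mm")
```

At 1 rad/s this gives roughly two thousand lines per square centimetre, spaced a fraction of a millimetre apart: the superfluid in a spinning bucket imitates rotation with a dense but still discrete array of vortices, and spinning faster simply adds more lines.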